Isolation and Observability: Vetting AI Browsers from Google, Microsoft, and the Edge

By JoeVu, at: Oct. 26, 2025, 4:38 p.m.

The arrival of AI agents and smart browsers like ChatGPT Atlas, Google’s Gemini in Chrome, and Microsoft’s Copilot has thrust a new and critical challenge onto enterprise security teams. The convenience of an AI that can read, summarize, and act across authenticated corporate tabs presents a massive new vector for data loss.

Choosing an AI browser platform is not about feature parity; it’s about data governance and security architecture. The following sections compare leading vendor strategies and outline the non-negotiable technical requirements for enterprise selection.

The Enterprise Service Boundary: Google's Gemini Approach

Google, a major player in the AI browser space with its integration of Gemini into Chrome and Workspace, has publicly committed to a clear Enterprise Service Boundary to protect corporate data.

Their core promise is data isolation. Enterprise content, meaning user prompts, context drawn from Workspace (Docs, Sheets, Gmail), and the AI’s responses, is strictly confined to the organization’s domain. Critically, this content is not used to train Google’s public, general-purpose models unless the organization explicitly opts in.

This commitment is designed to give corporate clients confidence that their intellectual property (IP) and sensitive communications will not inadvertently end up in the training data of publicly available models, preserving the integrity and confidentiality of their internal operations.
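Conceptually, the boundary is a policy applied to every AI request: enterprise content may only travel to in-boundary endpoints and is excluded from training unless the organization opts in. The sketch below is a minimal model of that policy, assuming a hypothetical allowlist of in-boundary hosts (`TENANT_BOUNDARY_HOSTS`) and a hypothetical `AIRequest` type. It is not a Google API; the real boundary is enforced on the vendor’s side.

```python
from dataclasses import dataclass

# Hypothetical: hosts this organization treats as inside its enterprise
# service boundary. A real list would come from vendor documentation and
# network policy, not a hard-coded set.
TENANT_BOUNDARY_HOSTS = {"ai.example-corp.internal", "workspace.example-corp.internal"}


@dataclass(frozen=True)
class AIRequest:
    destination_host: str
    contains_enterprise_content: bool
    allow_training_use: bool = False  # excluded from training unless set


def enforce_boundary(req: AIRequest, org_training_opt_in: bool = False) -> AIRequest:
    """Reject a request that would carry enterprise content outside the boundary,
    or mark it for training without an organization-level opt-in."""
    if not req.contains_enterprise_content:
        return req  # non-enterprise traffic is out of scope for this check
    if req.destination_host not in TENANT_BOUNDARY_HOSTS:
        raise PermissionError(f"enterprise content may not leave the boundary: {req.destination_host}")
    if req.allow_training_use and not org_training_opt_in:
        raise PermissionError("training use requires an explicit organization opt-in")
    return req
```

Defaulting `allow_training_use` to `False` mirrors the "excluded unless the organization opts in" stance: training use has to be requested explicitly rather than disabled explicitly.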

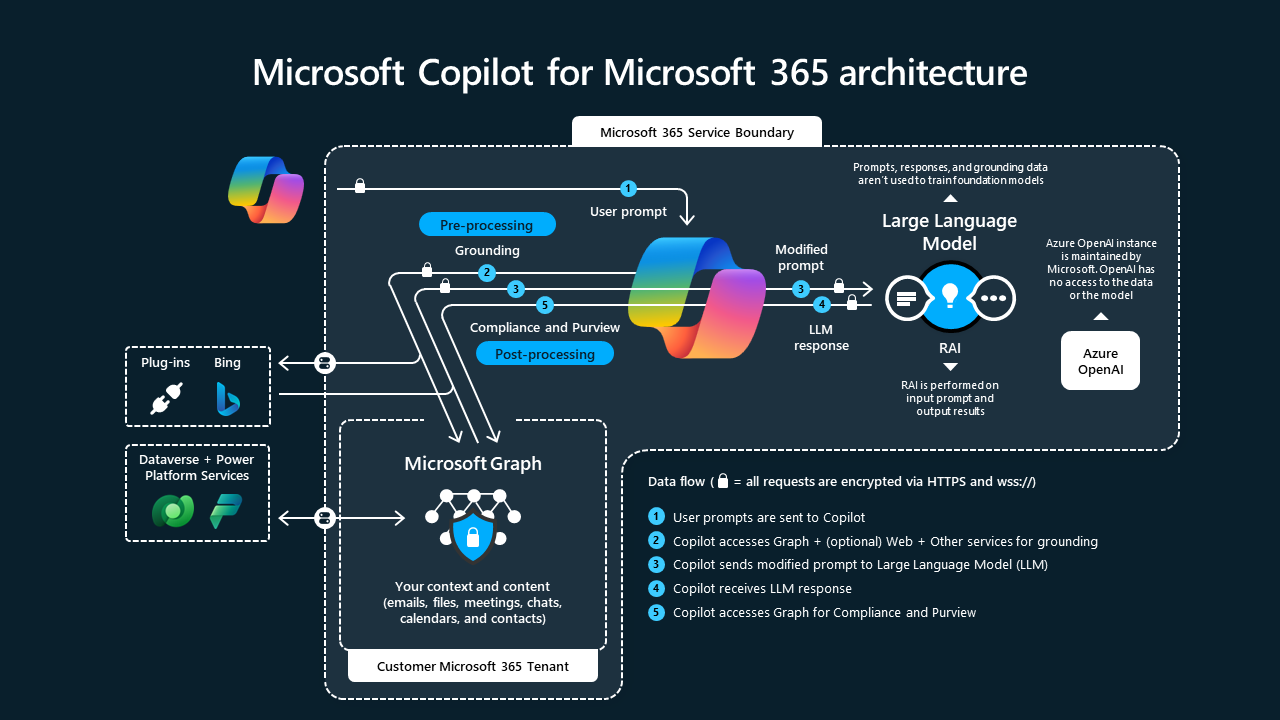

Microsoft Copilot's Data Isolation Commitment

Microsoft, with its Copilot integration across M365, has mirrored and aggressively marketed a similar data isolation strategy.

Microsoft’s assurance is that prompts and the resulting AI-generated responses are processed entirely within the M365 service boundary. As a result, AI interactions automatically inherit the organization’s existing security and compliance controls (such as Microsoft Purview sensitivity labels, formerly Azure Information Protection, and eDiscovery).

Microsoft also states explicitly that this commercial data is not used to train its foundation models. By strictly confining each interaction to the tenant’s trusted environment, Microsoft aims to extend the enterprise’s existing security perimeter to the new AI features, minimizing the surface area for corporate IP leakage.
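One way to picture "inheriting existing controls" is that the assistant can only ground its answers in content the user could already open, further filtered by sensitivity label. The following is a minimal sketch under that assumption; the label names, the `Document` type, and the `build_ai_context` helper are hypothetical and are not part of any Microsoft API.

```python
from dataclasses import dataclass

# Hypothetical label ranking, lowest to highest sensitivity.
LABEL_RANK = {"Public": 0, "General": 1, "Confidential": 2, "Highly Confidential": 3}


@dataclass(frozen=True)
class Document:
    title: str
    label: str
    allowed_groups: frozenset[str]  # groups that may already open the document


def build_ai_context(docs: list[Document], user_groups: set[str],
                     max_label: str = "Confidential") -> list[Document]:
    """Keep only documents the user can already access and whose label is at or
    below the ceiling the organization allows for AI grounding."""
    ceiling = LABEL_RANK[max_label]
    return [
        d for d in docs
        if d.allowed_groups & user_groups  # existing permissions still apply
        and LABEL_RANK[d.label] <= ceiling  # label policy still applies
    ]
```

Filtering before the prompt is assembled, rather than after the answer is generated, means restricted content never reaches the model at all.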

The Core Requirement: Isolation

These vendor strategies underscore a fundamental truth: The Enterprise Service Boundary is the single most critical factor for AI browser selection.

In an environment where a simple language command can bridge data across authenticated applications, the risk of corporate IP leaking into general-purpose LLM training sets is existential. If proprietary data an AI agent pulls from a CRM system is also fed into a public model’s training, it cannot be recalled, and that data is permanently compromised.

Therefore, for all internal work, from summarizing meeting notes to drafting confidential emails, isolation is a mandatory requirement. The platform must demonstrably enforce that all internal content is confined to the enterprise’s domain and explicitly excluded from the model provider’s public training sets. This is the only way to keep a convenient tool from becoming a catastrophic IP drain.
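Because isolation is framed here as mandatory rather than as one criterion among many, it fits a pass/fail gate better than a weighted score. A minimal sketch: the field names are illustrative, and each `True` should reflect verified evidence (contract terms, admin settings, testing), not marketing copy.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class IsolationPosture:
    # Illustrative requirements; each must be verified, not assumed.
    content_confined_to_tenant: bool
    excluded_from_training_by_default: bool
    exclusion_in_contract: bool
    admin_can_enforce_policy: bool


def isolation_gaps(posture: IsolationPosture) -> list[str]:
    """Return every requirement the vendor fails; any gap disqualifies it."""
    return [f.name for f in fields(posture) if not getattr(posture, f.name)]


def passes_isolation_gate(posture: IsolationPosture) -> bool:
    return not isolation_gaps(posture)
```

Returning the list of gaps, not just a boolean, gives the evaluation team something concrete to raise with the vendor.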

The Agentic Challenger: Perplexity Comet's Enterprise Posture

While Google and Microsoft extend existing enterprise ecosystem security, Perplexity Comet enters the market as a dedicated, AI-first browser whose core feature is agentic capability (the ability to perform autonomous, multi-step tasks across the web). This difference in design necessitates separate scrutiny.

Perplexity's Enterprise offering makes robust data isolation claims:

- Non-Use of Enterprise Data for Training: Like its larger competitors, Perplexity states that enterprise customer data is never used to train or fine-tune LLMs, including those of its third-party model partners.
- Compliance and Security: The platform touts SOC 2 Type II, GDPR, and HIPAA compliance, along with features like Single Sign-On (SSO), user management, and audit logs, positioning itself as a legitimate enterprise contender.

However, the agentic design introduces unique attack vectors. Recent security research has highlighted vulnerabilities in AI browsers, including Comet, involving indirect prompt injection through cleverly disguised elements (such as hidden text in screenshots or malicious URLs). This shows that even when the formal data isolation policy is sound, the attack surface of a highly autonomous agent is larger and must be continuously validated.
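Hidden page text is one of the disguises mentioned above. As a rough illustration, and not any vendor’s actual mitigation, the sketch below uses Python’s standard `html.parser` to surface text hidden through inline styles or the `hidden` attribute, so it can be reviewed or stripped before an agent reads the page. It does not cover text hidden inside images or screenshots, rules from external stylesheets, or script-generated content.

```python
import re
from html.parser import HTMLParser

# Inline-style patterns that hide text from a human reader while leaving it
# in the DOM an agent may read. Illustrative, not exhaustive.
HIDING_STYLE = re.compile(
    r"display\s*:\s*none|visibility\s*:\s*hidden|font-size\s*:\s*0(?![.\d])|opacity\s*:\s*0(?![.\d])",
    re.IGNORECASE,
)
# Void elements never get an end tag, so they must not be pushed on the stack.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class HiddenTextFinder(HTMLParser):
    """Collect text nodes inside an element hidden by inline style or the
    `hidden` attribute. Assumes reasonably well-formed HTML."""

    def __init__(self):
        super().__init__()
        self._hiding = []  # one flag per open element: does it hide its content?
        self.hidden_text = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        attr_map = dict(attrs)
        style = attr_map.get("style") or ""
        self._hiding.append("hidden" in attr_map or bool(HIDING_STYLE.search(style)))

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self._hiding:
            self._hiding.pop()

    def handle_data(self, data):
        # Text counts as hidden if any enclosing element hides it.
        if any(self._hiding) and data.strip():
            self.hidden_text.append(data.strip())


def find_hidden_text(html: str) -> list[str]:
    finder = HiddenTextFinder()
    finder.feed(html)
    finder.close()
    return finder.hidden_text
```

A non-empty result does not prove an attack, but instruction-like text that the user cannot see is a strong signal worth blocking or logging.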

Emerging Solutions: Isolated, Headless Browsing

Beyond the major players, specialized solutions are emerging that place architectural isolation at the forefront of their defense strategy. Platforms like Browserbase utilize isolated, headless browser instances (browsers that run in the background without a visible user interface) for executing AI-driven tasks.

This architecture treats every AI task as a fresh, sandboxed session. The entire operation, from visiting a page to running the AI script, is confined to a temporary, non-persistent, and fully isolated environment that is discarded when the task ends. For regulated industries and organizations with SOC 2 or HIPAA obligations, this architectural isolation is often a necessary first line of defense: it limits what an unknown zero-day exploit or a successful prompt injection can reach, and nothing it plants survives into the next task.
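The "fresh, non-persistent session" property can be illustrated with a throwaway profile directory per task. This is a minimal sketch, not Browserbase’s API: `run_task` is hypothetical, and the comment marks where a real runner would launch a headless browser. A temporary directory only isolates on-disk state; real sandboxes also separate processes, network access, and credentials.

```python
import tempfile
from pathlib import Path


def run_task(task_name: str) -> tuple[Path, list[str]]:
    """Run one AI task in a brand-new profile directory that is deleted on exit.

    Returns the (now deleted) profile path and whatever state the task found
    at start-up, which for a fresh directory is always empty.
    """
    with tempfile.TemporaryDirectory(prefix="agent-task-") as tmp:
        profile = Path(tmp)
        inherited = sorted(p.name for p in profile.iterdir())
        # Hypothetical: launch a headless browser with `profile` as its user-data
        # directory and run the agent script here. We simulate the cookies and
        # storage such a session would leave behind.
        (profile / "session-state.txt").write_text(f"cookies and storage for {task_name}")
    # Leaving the `with` block deletes the directory and everything in it.
    return profile, inherited
```

Because nothing survives the `with` block, cookies or local storage planted during one task cannot influence the next.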

The Non-Negotiable: Full Observability

The final technical requirement, regardless of the vendor, is Full Observability.

Since stealthy prompt injection attacks look like benign user actions, detection relies entirely on a comprehensive forensic trail. Full observability must include:

- Live View iFrames: The ability for security teams to watch the AI agent’s browser session in real time, or play back exactly what the agent "saw" and "did."
- Full Session Recording: A complete, high-fidelity record of the entire AI-driven workflow, including all page interactions.
- Command Logging: A detailed audit trail that logs every instruction the AI received (including hidden, indirect prompts) and every action it performed (e.g., "Clicked 'Send Email'," "Copied data to clipboard").
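Command logging only helps an investigation if the log itself can be trusted. One common technique, swapped in here for illustration rather than taken from any vendor, is hash chaining: each entry stores the SHA-256 of the previous entry, so altering or removing any entry other than the newest breaks verification. `CommandLog` and its fields are hypothetical; a production system would also ship entries to external, append-only storage. Recording each instruction’s `source` is what lets investigators separate user commands from instructions injected by page content.

```python
import hashlib
import json
import time

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class CommandLog:
    """Append-only audit trail of agent instructions and actions,
    with each entry chained to the hash of the one before it."""

    def __init__(self):
        self.entries = []

    def record(self, kind: str, detail: str, source: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"ts": time.time(), "kind": kind, "detail": detail,
                "source": source, "prev": prev_hash}
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```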

This level of auditable AI actions and command logging is essential for threat detection and incident response. Without it, an indirect prompt injection attack, which can execute and exfiltrate data in milliseconds, will be completely invisible, leaving the security team blind to the method and extent of the breach. The AI must not only operate within the service boundary but also be transparent enough to be monitored at the command level.
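Once instructions and actions are logged along with their source, simple detection rules become possible. One such rule: flag any action that sends data outside the organization after the agent accepted an instruction that came from page content rather than from the user. The sketch below is a hypothetical heuristic (the `Event` type and the rule are illustrative); real detection would combine many signals.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    kind: str      # "instruction" or "action"
    source: str    # "user", "page", or "agent"
    detail: str
    external: bool = False  # True if the action sends data outside the organization


def flag_injected_exfiltration(events: list[Event]) -> list[int]:
    """Return indices of external-send actions that follow an instruction
    which came from page content rather than from the user."""
    flagged = []
    page_instruction_active = False
    for i, ev in enumerate(events):
        if ev.kind == "instruction":
            # The most recent instruction decides: page-sourced opens the window,
            # a fresh user instruction closes it.
            page_instruction_active = ev.source == "page"
        elif ev.kind == "action" and ev.external and page_instruction_active:
            flagged.append(i)
    return flagged
```

Usage: in the event sequence "user asks for a CRM summary, page text says to send the account list to an outside URL, agent POSTs to that URL", only the POST is flagged; an external email sent after a new user instruction is not.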