When Redis Memory Ran Out: Lessons from a Real Production Outage

By hientd, at: Sept. 5, 2025, 5:11 p.m.


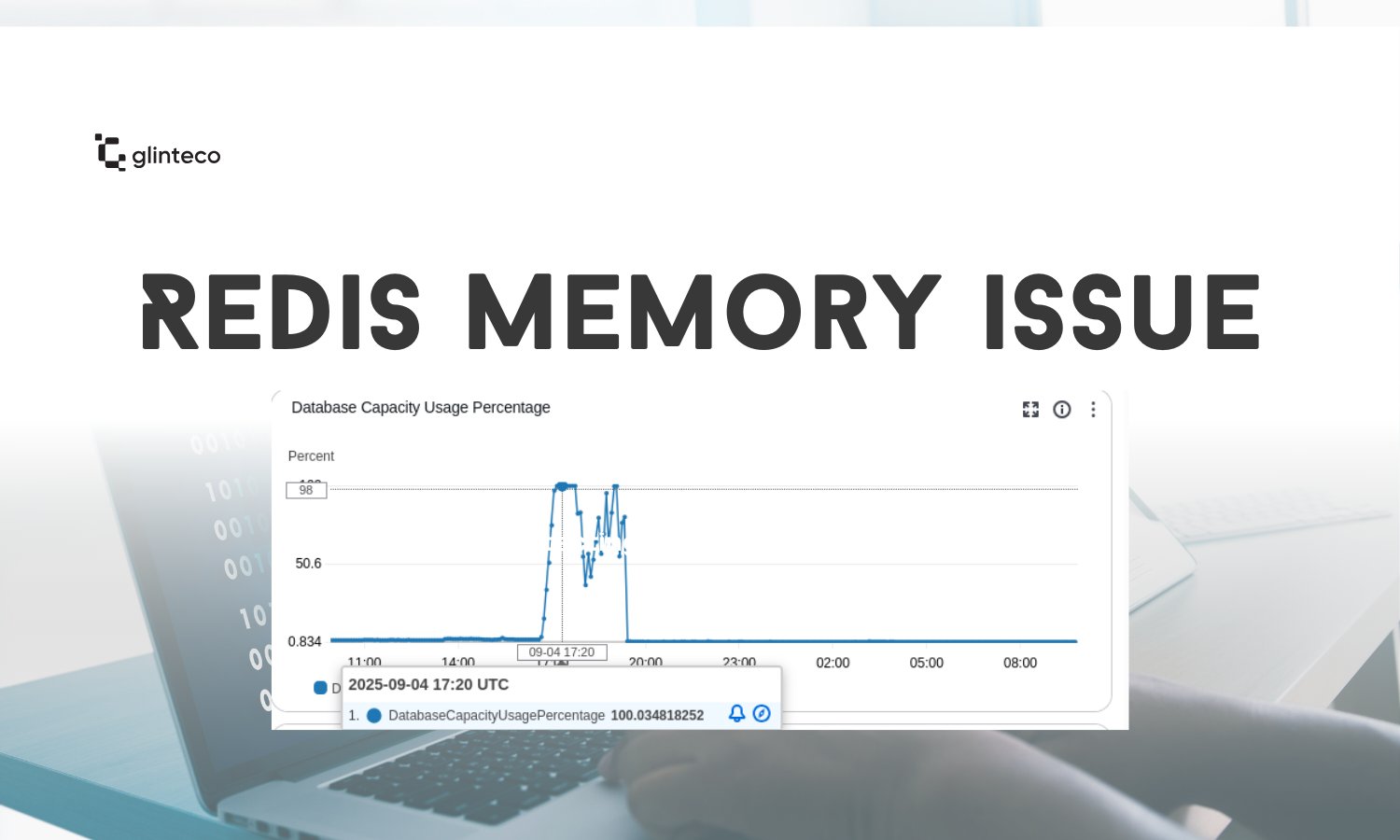

Sometimes production systems fail in ways you don’t expect, even with monitoring and scaling in place. This week, our team faced a critical issue that took down our production servers twice in two days. The root cause? Redis memory exhaustion.

What made this incident interesting is that Redis wasn’t failing because of the usual suspects (like long-lived keys or missing eviction policies). Instead, the culprit was hidden in the way our Celery tasks interacted with Redis under heavy load.

What Happened

- During peak traffic, multiple clients uploaded large files at the same time.
- Each upload triggered a background calculation through Celery.
- At peak, we saw up to 10,000 requests/second.

Our custom Celery task base class was designed to rate-limit tasks by writing lock keys into Redis:

```python
self.first_task_at_lock_key = f"{base_task_id}-first_task_at"
self.count_tasks_lock_key = f"{base_task_id}-count_tasks"
```

The problem?

Because base_task_id was derived from the task’s input data (sale_orders), these key names grew with the payload: when that data was huge, each key could reach 10 KB. With two keys per task, Redis ended up storing ~20 KB per task.
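To make the size problem concrete, here is a minimal sketch. It assumes, hypothetically, that base_task_id was built by serializing the task’s arguments (the exact derivation isn’t shown above); build_base_task_id, the "calculate_totals" task name, and the sample order shape are illustrative, not our production code.

```python
import json
from typing import List, Tuple

def build_base_task_id(task_name: str, sale_orders: List[dict]) -> str:
    # Hypothetical: the ID embeds the serialized input, so it grows with the payload.
    return f"{task_name}:{json.dumps(sale_orders, separators=(',', ':'))}"

def lock_keys(base_task_id: str) -> Tuple[str, str]:
    # Same naming scheme as the base class above: two keys per task.
    return f"{base_task_id}-first_task_at", f"{base_task_id}-count_tasks"

def key_bytes_per_task(base_task_id: str) -> int:
    # Redis key names are byte strings, so count UTF-8 bytes, not characters.
    return sum(len(key.encode("utf-8")) for key in lock_keys(base_task_id))

# A hypothetical upload with 300 order lines.
sale_orders = [{"order_id": i, "sku": f"SKU-{i:06d}", "qty": 1} for i in range(300)]
print(key_bytes_per_task(build_base_task_id("calculate_totals", sale_orders)))
```

For this input the two key names alone total roughly 26 KB, while an empty order list yields only a few dozen bytes: key size scales linearly with the payload.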

At scale:

- 1,000 tasks = ~20 MB of Redis memory.
- With thousands of tasks enqueued every second, memory usage grew uncontrollably until Redis refused writes:

```
redis.exceptions.ResponseError: OOM command not allowed when used memory > 'maxmemory'
```
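The arithmetic behind that growth is simple enough to sketch. This assumes the lock keys are not expired or deleted while the burst lasts (whether they had a TTL isn’t stated here), and the 1 GB maxmemory and 200 MB baseline below are hypothetical values, not our actual configuration. Real usage runs higher still, since Redis adds per-key overhead and stores a value for each key.

```python
def growth_bytes_per_sec(bytes_per_task: int, tasks_per_sec: int) -> int:
    # Every task adds its lock keys; assume none expire during the burst.
    return bytes_per_task * tasks_per_sec

def seconds_until_oom(maxmemory: int, used: int, bytes_per_task: int, tasks_per_sec: int) -> float:
    # Time until used memory crosses maxmemory; after that, Redis rejects writes with OOM.
    headroom = max(maxmemory - used, 0)
    return headroom / growth_bytes_per_sec(bytes_per_task, tasks_per_sec)

KB, MB, GB = 1_000, 1_000_000, 1_000_000_000  # decimal units, matching the figures above

per_task = 2 * 10 * KB  # two ~10 KB keys per task
print(growth_bytes_per_sec(per_task, 1_000) / MB)            # 20.0 (MB/s)
print(seconds_until_oom(1 * GB, 200 * MB, per_task, 1_000))  # 40.0 (seconds)
```

Under these assumptions, a hypothetical 1 GB instance with 800 MB of headroom fills in well under a minute of peak traffic.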

And just like that, our production servers crashed. Here is the full traceback:

```
django_redis.exceptions.ConnectionInterrupted: Redis ResponseError: OOM command not allowed when used memory > 'maxmemory'.

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "venv/lib/python3.8/site-packages/django/core/handlers/exception.py", line 47, in inner
    response = get_response(request)
  File "venv/lib/python3.8/site-packages/django/core/handlers/base.py", line 181, in _get_response
    response = wrapped_callback(request, *callback_args, **callback_kwargs)
  File "venv/lib/python3.8/site-packages/sentry_sdk/integrations/django/views.py", line 67, in sentry_wrapped_callback
    return callback(request, *args, **kwargs)
  File "venv/lib/python3.8/site-packages/django/views/decorators/csrf.py", line 54, in wrapped_view
    return view_func(*args, **kwargs)
  File "venv/lib/python3.8/site-packages/django/views/generic/base.py", line 70, in view
    return self.dispatch(request, *args, **kwargs)
  File "venv/lib/python3.8/site-packages/rest_framework/views.py", line 509, in dispatch
    response = self.handle_exception(exc)
  File "venv/lib/python3.8/site-packages/rest_framework/views.py", line 469, in handle_exception
    self.raise_uncaught_exception(exc)
  File "venv/lib/python3.8/site-packages/rest_framework/views.py", line 480, in raise_uncaught_exception
    raise exc
  File "venv/lib/python3.8/site-packages/rest_framework/views.py", line 497, in dispatch
    self.initial(request, *args, **kwargs)
  File "venv/lib/python3.8/site-packages/sentry_sdk/integrations/django/__init__.py", line 270, in sentry_patched_drf_initial
    return old_drf_initial(self, request, *args, **kwargs)
  File "venv/lib/python3.8/site-packages/rest_framework/views.py", line 416, in initial
    self.check_throttles(request)
  File "venv/lib/python3.8/site-packages/rest_framework/views.py", line 359, in check_throttles
    if not throttle.allow_request(request, self):
  File "venv/lib/python3.8/site-packages/rest_framework/throttling.py", line 132, in allow_request
    return self.throttle_success()
  File "venv/lib/python3.8/site-packages/rest_framework/throttling.py", line 140, in throttle_success
    self.cache.set(self.key, self.history, self.duration)
  File "venv/lib/python3.8/site-packages/django_redis/cache.py", line 38, in _decorator
    raise e.__cause__
  File "venv/lib/python3.8/site-packages/django_redis/client/default.py", line 175, in set
    return bool(client.set(nkey, nvalue, nx=nx, px=timeout, xx=xx))
  File "venv/lib/python3.8/site-packages/redis/client.py", line 1801, in set
    return self.execute_command('SET', *pieces)
  File "venv/lib/python3.8/site-packages/redis/client.py", line 901, in execute_command
    return self.parse_response(conn, command_name, **options)
  File "venv/lib/python3.8/site-packages/redis/client.py", line 915, in parse_response
    response = connection.read_response()
  File "venv/lib/python3.8/site-packages/redis/connection.py", line 756, in read_response
    raise response
redis.exceptions.ResponseError: OOM command not allowed when used memory > 'maxmemory'.
```

Root Cause

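- Keys were too large because raw data was passed directly into the Celery task.
- Redis was effectively serving as both a cache and task-payload storage, which it isn’t optimized for. Because Django’s cache (including DRF’s request throttling, per the traceback) ran on the same Redis, ordinary API requests failed too once it hit maxmemory.
- High concurrency amplified the issue, pushing Redis beyond its configured memory limit.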

The Fix

We changed our queueing strategy:

Instead of passing raw data directly into tasks, we:

- Store the raw data in the database.
- Pass only a lightweight ID + hash into the Celery task.

This reduced key sizes from ~10 KB → <100 bytes.
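A minimal sketch of that pattern follows. It uses an in-memory SQLite table as a stand-in for our real database and SHA-256 as the hash; the uploads table and the enqueue_upload, lock_keys, and process_upload names are hypothetical. In production, process_upload would be the body of a Celery task that receives only (upload_id, digest).

```python
import hashlib
import json
import sqlite3
from typing import List, Tuple

conn = sqlite3.connect(":memory:")  # stand-in for the real database
conn.execute("CREATE TABLE uploads (id INTEGER PRIMARY KEY, payload TEXT NOT NULL, digest TEXT NOT NULL)")

def enqueue_upload(sale_orders: List[dict]) -> Tuple[int, str]:
    # Persist the raw data once; only its ID and digest travel through Redis.
    payload = json.dumps(sale_orders, separators=(",", ":"))
    digest = hashlib.sha256(payload.encode("utf-8")).hexdigest()
    cur = conn.execute("INSERT INTO uploads (payload, digest) VALUES (?, ?)", (payload, digest))
    conn.commit()
    # In Celery this is where a (hypothetical) task would be enqueued: calculate.delay(id, digest)
    return cur.lastrowid, digest

def lock_keys(upload_id: int, digest: str) -> Tuple[str, str]:
    # Key names now have a bounded size no matter how large the upload is.
    base_task_id = f"calculate:{upload_id}:{digest}"
    return f"{base_task_id}-first_task_at", f"{base_task_id}-count_tasks"

def process_upload(upload_id: int, digest: str) -> List[dict]:
    # Worker side: load the payload by ID and verify it matches the digest.
    row = conn.execute("SELECT payload FROM uploads WHERE id = ?", (upload_id,)).fetchone()
    if row is None:
        raise LookupError(f"upload {upload_id} not found")
    if hashlib.sha256(row[0].encode("utf-8")).hexdigest() != digest:
        raise ValueError("payload digest mismatch")
    return json.loads(row[0])

orders = [{"order_id": i, "qty": 1} for i in range(10_000)]
upload_id, digest = enqueue_upload(orders)
print([len(k) for k in lock_keys(upload_id, digest)])  # [90, 88]
```

Even with 10,000 order lines, each lock key stays under 100 bytes, because the 64-character hex digest has a fixed length. The digest check also guards against the worker processing data that changed after the task was enqueued.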

The Results

- Peak memory growth dropped from ~20 MB/s to ~0.2 MB/s (a 99% reduction).
- Redis stability was restored.
- Our servers ran smoothly even during simultaneous large file uploads.
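The 99% figure follows directly from the key sizes. As a back-of-the-envelope check, assuming ~1,000 tasks/second (the rate implied by the 20 MB/s figure) and taking the new keys at their 100-byte upper bound:

```latex
\begin{aligned}
\text{before: }    & 2 \times 10\,\text{KB} \times 1000\,\text{tasks/s} = 20\,\text{MB/s} \\
\text{after: }     & 2 \times 100\,\text{B} \times 1000\,\text{tasks/s} = 0.2\,\text{MB/s} \\
\text{reduction: } & 1 - \frac{0.2}{20} = 0.99 = 99\%
\end{aligned}
```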

Key Takeaways

- Beware of hidden payloads: Passing large data into Celery tasks may look harmless until it scales.
- Redis isn’t a data warehouse: Use it for lightweight keys/values, not for big payload storage.
- Always measure memory impact: A few KB per key is fine… until you multiply it by thousands of tasks/second.
- Design for scale early: Even “small” inefficiencies can cause major outages under real-world traffic.
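One cheap way to act on these takeaways is to measure the serialized size of task arguments before enqueuing and refuse anything over a budget. The sketch below approximates a JSON message body (JSON is Celery’s default serializer) by serializing args and kwargs together; check_task_payload, TaskPayloadTooLarge, and the 1 KB MAX_TASK_PAYLOAD_BYTES budget are hypothetical names and values, not part of Celery.

```python
import json

MAX_TASK_PAYLOAD_BYTES = 1_000  # hypothetical budget; tune to your workload

class TaskPayloadTooLarge(ValueError):
    pass

def check_task_payload(*args, **kwargs) -> int:
    # Approximate the JSON-serialized task arguments and measure their size in bytes.
    size = len(json.dumps([args, kwargs], separators=(",", ":")).encode("utf-8"))
    if size > MAX_TASK_PAYLOAD_BYTES:
        raise TaskPayloadTooLarge(
            f"task payload is {size} bytes (budget {MAX_TASK_PAYLOAD_BYTES}); "
            "store the data and pass an ID instead"
        )
    return size

# An ID + digest stays well within budget...
print(check_task_payload(42, "a" * 64))
# ...while raw order data is rejected before it ever reaches Redis.
try:
    check_task_payload([{"order_id": i, "qty": 1} for i in range(500)])
except TaskPayloadTooLarge as exc:
    print(exc)
```

Calling a guard like this just before enqueuing turns a silent memory leak into a loud, early error during development and load testing.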

Final Thoughts

Incidents like these are painful but valuable. They force us to revisit assumptions, improve architecture, and build more resilient systems. In our case, this was a reminder that efficiency in background task design is just as important as business logic correctness.

We hope sharing this helps others avoid a similar outage.