Building a Job Crawler: Aggregating Job Listings

By khoanc, at: 15:32, March 1, 2023


Job postings are scattered across many company career sites, so collecting them from several sources can give job seekers useful insight and more opportunities. A job crawler automatically gathers postings from multiple websites and helps you build a centralized dataset of job openings.

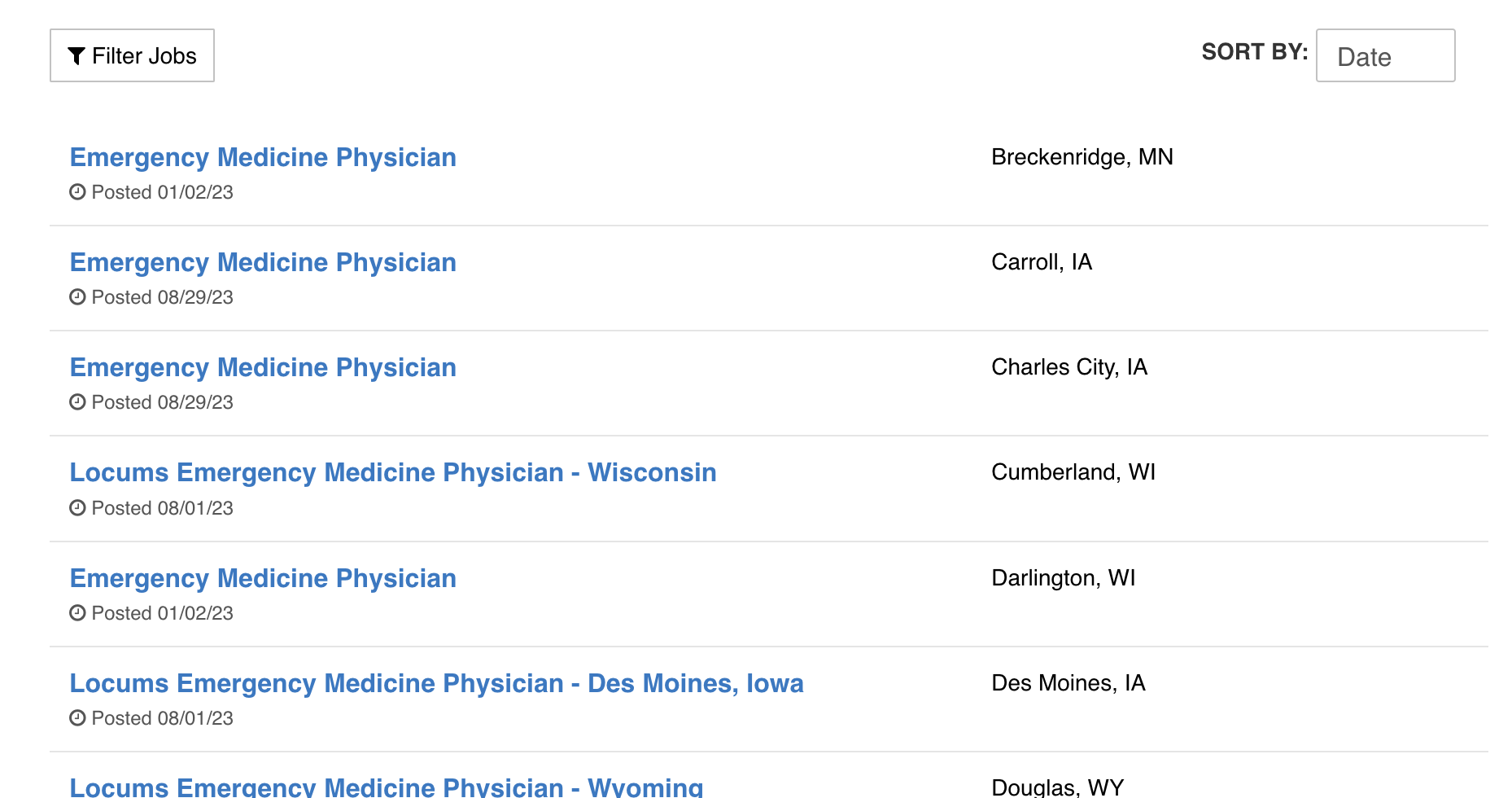

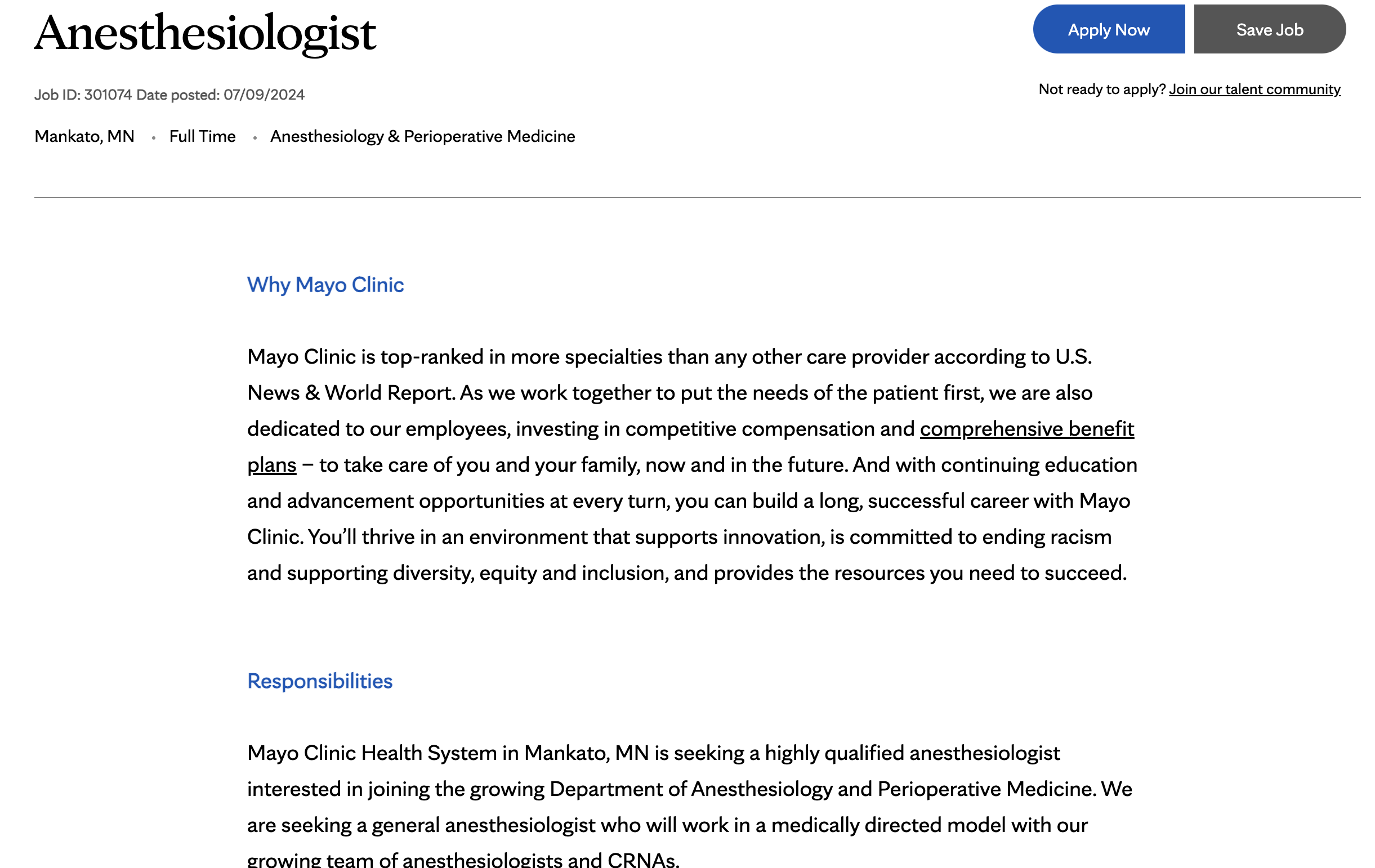

In this article, we walk through building a job crawler in Python, using two job boards as examples: Status Health Partners and Mayo Clinic.

Why build a job crawler?

A job crawler is useful for several reasons:

- Job seekers: Access more job opportunities in one place.

- Employers: Identify trends and popular job titles.

- Data analysts: Analyze job market trends and demand.

- Data aggregation: Build a comprehensive job board from multiple sources.

Tools and libraries

For this project, we will use the following tools and libraries:

- Python: The programming language we will use.

- BeautifulSoup: To parse HTML content.

- Requests: To send HTTP requests to the websites.

- Pandas: To store and manipulate the collected data.

You can install these libraries with pip:

```bash
pip install beautifulsoup4 requests pandas
```

Step-by-step guide

1. Inspect the websites

First, we inspect the job listing pages to understand how they are structured. We look for patterns in the HTML that we can use to extract job details, such as a repeated element that wraps each posting.
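Besides reading the page in your browser's developer tools, you can count which tag and class combinations repeat most often; the element that wraps each posting usually shows up near the top. Below is a minimal sketch of that idea using only the standard library's `html.parser`. `ClassCounter`, `most_common_classes`, and the sample HTML are hypothetical, and the class names in the sample only echo the ones used later in this article, not necessarily the real sites' markup.

```python
from collections import Counter
from html.parser import HTMLParser


class ClassCounter(HTMLParser):
    """Count how often each (tag, class) pair appears in a page."""

    def __init__(self):
        super().__init__()
        self.counts = Counter()

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) tuples; value may be None
        for name, value in attrs:
            if name == "class" and value:
                # An element can carry several space-separated classes
                for cls in value.split():
                    self.counts[(tag, cls)] += 1


def most_common_classes(html, n=5):
    """Return the n most frequent (tag, class) pairs in the HTML."""
    counter = ClassCounter()
    counter.feed(html)
    counter.close()
    return counter.counts.most_common(n)


# Hypothetical sample; a real listing page is much larger
sample = """
<div class="job-card"><h2 class="job-title">Nurse</h2></div>
<div class="job-card"><h2 class="job-title">Pharmacist</h2></div>
<div class="footer">Contact</div>
"""
print(most_common_classes(sample, n=2))
```

On a real page you would pass the downloaded HTML (decoded to text) instead of `sample`.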

2. Send HTTP requests

We use the requests library to fetch the HTML content of the job listing pages.

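```python
import requests
from bs4 import BeautifulSoup


def get_page_content(url, timeout=10):
    """Download a page and return its raw HTML bytes, or None on failure."""
    try:
        # A timeout keeps the script from hanging on a slow server
        response = requests.get(url, timeout=timeout)
    except requests.RequestException:
        # Connection errors, timeouts, invalid URLs, etc.
        return None
    if response.status_code == 200:
        return response.content
    return None


url = 'https://jobs.statushp.com/'
page_content = get_page_content(url)
```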
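Before sending requests to a site, it is worth checking its robots.txt, which lists the paths automated clients are asked to avoid. Python's standard library can evaluate those rules. A minimal sketch, assuming you have already downloaded robots.txt as text and split it into lines; `allowed_to_fetch`, the rules, and the `jobs.example.com` URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def allowed_to_fetch(robots_lines, url, user_agent="*"):
    """Return True if the robots.txt rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() accepts the file's lines directly, so no network call is made here
    parser.parse(robots_lines)
    return parser.can_fetch(user_agent, url)


# Hypothetical robots.txt content; in practice, download
# https://<site>/robots.txt (for example with requests) and use .splitlines()
rules = [
    "User-agent: *",
    "Disallow: /admin/",
]

print(allowed_to_fetch(rules, "https://jobs.example.com/search"))
print(allowed_to_fetch(rules, "https://jobs.example.com/admin/panel"))
```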

3. Parse the HTML content

Next, we use BeautifulSoup to parse the HTML and extract each posting's title, location, and link to the job post. The company name is the same for every posting on a given site, so we set it directly.

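```python
def parse_statushp_jobs(page_content):
    """Extract job postings from a Status Health Partners listing page."""
    # Nothing to parse if the download failed
    if page_content is None:
        return []
    soup = BeautifulSoup(page_content, 'html.parser')
    job_listings = []
    # These class names must match the site's actual markup; confirm them
    # in your browser's developer tools, since they can differ or change.
    for job_card in soup.find_all('div', class_='job-card'):
        title_tag = job_card.find('h2', class_='job-title')
        location_tag = job_card.find('div', class_='location')
        link_tag = job_card.find('a', class_='apply-button')
        job_listings.append({
            # Fall back to empty values instead of crashing on a missing tag
            'Job Title': title_tag.get_text().strip() if title_tag else '',
            'Company': 'Status Health Partners',
            'Location': location_tag.get_text().strip() if location_tag else '',
            'Job Link': link_tag.get('href') if link_tag else None,
        })
    return job_listings


jobs_statushp = parse_statushp_jobs(page_content)
```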
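Scraped text often keeps line breaks and indentation from the HTML, and `href` values are frequently relative paths such as `/jobs/123`. A minimal sketch of a cleanup step, assuming records shaped like the dicts built above; `normalize_job` and the sample record are hypothetical, and `urljoin` comes from the standard library.

```python
from urllib.parse import urljoin


def normalize_job(job, base_url):
    """Return a cleaned copy of a scraped job record."""
    cleaned = {}
    for key, value in job.items():
        if isinstance(value, str):
            # Collapse runs of spaces and newlines left over from the HTML
            value = " ".join(value.split())
        cleaned[key] = value
    # Turn a relative link into an absolute URL based on the page address
    if cleaned.get("Job Link"):
        cleaned["Job Link"] = urljoin(base_url, cleaned["Job Link"])
    return cleaned


# Hypothetical raw record
raw = {
    "Job Title": "  Registered\n   Nurse ",
    "Company": "Status Health Partners",
    "Location": "Example\n   City",
    "Job Link": "/jobs/123",
}
print(normalize_job(raw, "https://jobs.statushp.com/"))
```

Applied to the parser output, this would be `[normalize_job(job, url) for job in jobs_statushp]`.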

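4. Scrape multiple sources

We can follow the same process to collect postings from the Mayo Clinic job board. Only the URL and the company name change below; in practice each site has its own markup, so the selectors usually need adjusting as well.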

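```python
url_mayo = 'https://jobs.mayoclinic.org/'
page_content_mayo = get_page_content(url_mayo)


def parse_mayoclinic_jobs(page_content):
    """Extract job postings from a Mayo Clinic listing page."""
    # Nothing to parse if the download failed
    if page_content is None:
        return []
    soup = BeautifulSoup(page_content, 'html.parser')
    job_listings = []
    # Same selectors as the Status Health Partners parser; adjust them
    # to whatever Mayo Clinic's markup actually uses.
    for job_card in soup.find_all('div', class_='job-card'):
        title_tag = job_card.find('h2', class_='job-title')
        location_tag = job_card.find('div', class_='location')
        link_tag = job_card.find('a', class_='apply-button')
        job_listings.append({
            # Fall back to empty values instead of crashing on a missing tag
            'Job Title': title_tag.get_text().strip() if title_tag else '',
            'Company': 'Mayo Clinic',
            'Location': location_tag.get_text().strip() if location_tag else '',
            'Job Link': link_tag.get('href') if link_tag else None,
        })
    return job_listings


jobs_mayo = parse_mayoclinic_jobs(page_content_mayo)
```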
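Each new site adds another fetch-then-parse pair. One way to keep that manageable, sketched here as an assumption rather than the method used above, is to list the sources in one place and loop over them, pausing between requests and recording which sites failed. `scrape_all`, `demo_parser`, and the fake pages are hypothetical stand-ins so the sketch runs without network access.

```python
import time


def scrape_all(sources, fetch, delay_seconds=1.0):
    """Fetch and parse each source in turn.

    sources: list of (name, url, parser) tuples, where parser turns page
             content into a list of job dicts
    fetch:   callable that takes a URL and returns page content or None
    Returns (all_jobs, failed_names).
    """
    all_jobs = []
    failed = []
    for index, (name, url, parser) in enumerate(sources):
        if index > 0:
            # Be polite: pause before each request after the first
            time.sleep(delay_seconds)
        content = fetch(url)
        if content is None:
            failed.append(name)
            continue
        all_jobs.extend(parser(content))
    return all_jobs, failed


# Hypothetical stand-ins: only Site A "downloads" successfully
fake_pages = {"https://a.example/": "Nurse"}


def demo_parser(content):
    return [{"Job Title": content, "Company": "Site A"}]


demo_sources = [
    ("Site A", "https://a.example/", demo_parser),
    ("Site B", "https://b.example/", demo_parser),
]
jobs, failed = scrape_all(demo_sources, fake_pages.get, delay_seconds=0)
print(jobs)
print(failed)
```

With the functions from this article, the call would be `scrape_all([('Status Health Partners', url, parse_statushp_jobs), ('Mayo Clinic', url_mayo, parse_mayoclinic_jobs)], get_page_content)`.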

5. Aggregate the data

We combine the data collected from both sources into a single Pandas DataFrame.

```python
import pandas as pd

all_jobs = jobs_statushp + jobs_mayo
df_jobs = pd.DataFrame(all_jobs)
print(df_jobs)
```
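When sources overlap, or the same page is scraped twice, the combined list can contain the same posting more than once. A minimal sketch that drops repeats before building the DataFrame, assuming the `'Job Link'` value identifies a posting (some sites may use several URLs for one job). `deduplicate_jobs` and the sample records are hypothetical.

```python
def deduplicate_jobs(jobs, key="Job Link"):
    """Keep the first record for each key value; records without one are kept."""
    seen = set()
    unique = []
    for job in jobs:
        value = job.get(key)
        if value is not None:
            if value in seen:
                continue  # this posting was already collected
            seen.add(value)
        unique.append(job)
    return unique


# Hypothetical records: the first two are the same posting
sample_jobs = [
    {"Job Title": "Nurse", "Job Link": "https://a.example/jobs/1"},
    {"Job Title": "Nurse", "Job Link": "https://a.example/jobs/1"},
    {"Job Title": "Pharmacist", "Job Link": None},
]
print(deduplicate_jobs(sample_jobs))
```

If you prefer to stay in pandas, `df_jobs.drop_duplicates(subset=['Job Link'])` keeps the first occurrence of each link by default.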

6. Save the data

Finally, we save the aggregated job listings to a CSV file for further analysis or reuse.

```python
df_jobs.to_csv('aggregated_job_listings.csv', index=False)
```
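If you only need the CSV file, the standard library `csv` module can write the same list of dicts without pandas. A minimal sketch; `write_jobs_csv` is a hypothetical helper, the sample record is made up, and the column order mirrors the keys produced by the parsers above. The demo writes to an in-memory buffer so it runs anywhere; for a real file, open it with `open('aggregated_job_listings.csv', 'w', newline='', encoding='utf-8')`, since the csv module expects `newline=''`.

```python
import csv
import io

# Column order matches the dict keys produced by the parsers
FIELDNAMES = ["Job Title", "Company", "Location", "Job Link"]


def write_jobs_csv(jobs, file_obj):
    """Write job dicts to an open text file as CSV, with a header row."""
    # extrasaction="ignore" skips any keys not listed in FIELDNAMES
    writer = csv.DictWriter(file_obj, fieldnames=FIELDNAMES, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(jobs)


# Round-trip a hypothetical record through an in-memory buffer
buffer = io.StringIO(newline="")
write_jobs_csv(
    [{"Job Title": "Nurse", "Company": "Example Hospital",
      "Location": "Example City", "Job Link": "https://example.org/jobs/1"}],
    buffer,
)
buffer.seek(0)
rows = list(csv.DictReader(buffer))
print(rows[0]["Job Title"])
```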

Conclusion

A job crawler can give job seekers, employers, and data analysts a broader view of the job market. With Python and libraries such as Requests, BeautifulSoup, and Pandas, you can automate collecting job postings from multiple sources and combine them into one comprehensive dataset of job openings. This guide is a starting point for building your own job crawler, which you can extend and customize to fit your specific needs.