newspaper3k - Một gói phần mềm trích xuất tin tức

By khoanc, at: 15:25 Ngày 30 tháng 8 năm 2023

Thời gian đọc ước tính: __READING_TIME__ phút

Mở đầu

Trong thời đại kỹ thuật số nhanh chóng hiện nay, việc cập nhật thông tin và tin tức mới nhất là quan trọng hơn bao giờ hết. Internet đóng vai trò như một kho tàng dữ liệu thời gian thực, và web scraping đã nổi lên như một kỹ thuật mạnh mẽ để khai thác thông tin này. Web scraping liên quan đến việc tự động trích xuất dữ liệu từ các trang web, cho phép chúng ta thu thập thông tin chi tiết, theo dõi xu hướng và đưa ra quyết định sáng suốt.

Có rất nhiều gói scraper mà chúng ta đã đề cập trong bài đăng về scraper nếu bạn muốn xem lại sau.

Một công cụ đáng chú ý khác đã nổi bật trong lĩnh vực scraping tin tức là gói Python "newspaper3k". Được thiết kế với sự hiệu quả và đơn giản, newspaper3k giúp các nhà phát triển dễ dàng scraping bài báo tin tức từ nhiều nguồn khác nhau. Trong bài viết này, chúng ta sẽ đi sâu vào những chi tiết phức tạp của newspaper3k, cung cấp một hướng dẫn toàn diện để khai thác tiềm năng của nó cho việc scraping tin tức hiệu quả.

Hiểu về newspaper3k

Newspaper3k nổi bật như một gói Python linh hoạt và thân thiện với người dùng. Nó được thiết kế đặc biệt để scraping bài báo tin tức từ các ấn phẩm trực tuyến, blog và trang web khác nhau. Với khả năng mạnh mẽ, newspaper3k loại bỏ sự cần thiết phải trích xuất dữ liệu thủ công phức tạp, cho phép người dùng tập trung vào việc trích xuất thông tin chi tiết từ thông tin đã thu thập.

Các tính năng và ưu điểm chính:

- Trích xuất bài viết: Một trong những điểm mạnh chính của newspaper3k là khả năng trích xuất chính xác thông tin cần thiết từ các bài báo tin tức. Điều này bao gồm các chi tiết như tiêu đề bài viết, tác giả, ngày xuất bản và nội dung chính.

- Phát hiện ngôn ngữ: newspaper3k sử dụng các kỹ thuật xử lý ngôn ngữ tự nhiên tiên tiến để tự động phát hiện ngôn ngữ của bài viết. Tính năng này đặc biệt hữu ích cho việc scraping nội dung từ các trang web đa ngôn ngữ.

- Trích xuất từ khóa: Ngoài các chi tiết bài viết cơ bản, newspaper3k có thể xác định các từ khóa và thuật ngữ quan trọng trong bài viết. Điều này giúp trong việc phân loại, phân tích chủ đề và hiểu nội dung.

- Trích xuất hình ảnh: Gói này cũng có thể trích xuất hình ảnh liên quan đến bài viết, cung cấp một cái nhìn tổng quan về các yếu tố hình ảnh đi kèm với nội dung viết.

- Tóm tắt: newspaper3k cung cấp khả năng tạo ra các bản tóm tắt ngắn gọn của bài viết, cung cấp một cái nhìn tổng quan nhanh chóng về những điểm chính của nội dung.

- API thân thiện với người dùng: Đối với các nhà phát triển, tính dễ sử dụng là một tính năng nổi bật. API và tài liệu trực quan của nó làm cho nó dễ tiếp cận với cả người mới bắt đầu và lập trình viên có kinh nghiệm.

Tính dễ sử dụng: Cho dù bạn là một nhà phát triển dày dạn kinh nghiệm hay mới bắt đầu với Python, newspaper3k đều có một quá trình triển khai rất đơn giản. Nó tách rời những phức tạp của web scraping, cho phép người dùng tập trung vào việc truy cập và sử dụng dữ liệu đã scraping. Tính khả dụng này làm cho nó trở thành một công cụ lý tưởng cho những người có trình độ lập trình khác nhau.

Với newspaper3k, các nhà phát triển có thể bỏ qua các quy trình phức tạp liên quan đến việc phân tích thủ công cấu trúc HTML và CSS của các trang web. Thay vào đó, gói này xử lý các tác vụ này đằng sau hậu trường, cung cấp một giao diện hợp lý cho việc trích xuất bài viết.

Về bản chất, newspaper3k đơn giản hóa quá trình scraping, làm cho người dùng có thể nhanh chóng thu thập các bài báo tin tức từ nhiều nguồn khác nhau mà không cần kiến thức lập trình chuyên sâu.

Sự đơn giản trong hành động:

Một trong những khía cạnh hấp dẫn nhất của newspaper3k là sự đơn giản của nó. Ngay cả khi bạn mới bắt đầu với web scraping, bạn cũng có thể nhanh chóng khai thác khả năng của nó. Hãy xem một ví dụ cơ bản về cách sử dụng newspaper3k để trích xuất thông tin từ một bài báo tin tức:

from newspaper import Article

# Khởi tạo một đối tượng Article với URL của bài báo tin tức

article_url = "https://example.com/news-article"

article = Article(article_url)

# Tải xuống và phân tích bài viết

article.download()

article.parse()

# Trích xuất thông tin

title = article.title

author = article.authors

publish_date = article.publish_date

content = article.text

# In thông tin đã trích xuất

print("Title:", title)

print("Author:", author)

print("Publish Date:", publish_date)

print("Content:", content)

Chỉ với một vài dòng code, newspaper3k thu thập và sắp xếp thông tin cần thiết từ một bài báo tin tức, thể hiện cách tiếp cận thân thiện với người dùng của nó đối với web scraping.

Cài đặt và thiết lập

Trước khi bạn có thể bắt đầu vào thế giới scraping tin tức với newspaper3k, bạn cần thiết lập gói này trên hệ thống của mình. Rất may, quá trình cài đặt rất đơn giản. Làm theo các bước sau để bắt đầu:

-

Cài đặt Python: Đảm bảo rằng bạn đã cài đặt Python trên hệ thống của mình. Nếu chưa, bạn có thể tải xuống và cài đặt nó từ trang web chính thức của Python (https://www.python.org/).

-

Cài đặt newspaper3k: Mở thiết bị đầu cuối hoặc dấu nhắc lệnh của bạn và sử dụng lệnh pip sau để cài đặt newspaper3k:

pip install newspaper3k

-

Cài đặt phụ thuộc: Tùy thuộc vào hệ thống của bạn, bạn có thể cần cài đặt các phụ thuộc bổ sung để newspaper3k hoạt động chính xác. Ví dụ: trên các hệ thống dựa trên Ubuntu, bạn có thể cài đặt các gói sau:

sudo apt-get install libxml2-dev libxslt-dev

-

Kiểm tra cài đặt: Để đảm bảo rằng newspaper3k đã được cài đặt đúng cách, hãy chạy một script Python đơn giản trong thiết bị đầu cuối của bạn:

from newspaper

import Article

article = Article("https://example.com")

print(article.title)

Nếu bạn thấy tiêu đề của bài báo được in trong thiết bị đầu cuối, chúc mừng – newspaper3k đã được cài đặt thành công và sẵn sàng sử dụng!

Sử dụng cơ bản

Bây giờ newspaper3k đã hoạt động, hãy cùng khám phá cách sử dụng cơ bản của nó. Chúng ta sẽ đi qua quy trình scraping một bài báo tin tức và trích xuất thông tin liên quan từ nó.

-

Nhập mô-đun:

Bắt đầu bằng cách nhập mô-đun cần thiết ở đầu script Python của bạn:

from newspaper import Article -

Tạo đối tượng Article:

Để scraping một bài báo tin tức cụ thể, bạn cần tạo một đối tượng

Articlevà cung cấp URL của bài báo đó làm đối số:article_url = "https://example.com/news-article"

article = Article(article_url) -

Tải xuống và phân tích:

Tiếp theo, bạn cần tải xuống và phân tích bài báo để trích xuất nội dung của nó:

article.download()

article.parse() -

Trích xuất thông tin:

Sau khi bài báo đã được tải xuống và phân tích, bạn có thể dễ dàng trích xuất nhiều thông tin khác nhau bằng các thuộc tính có sẵn:

- Tiêu đề:

article.title - Tác giả:

article.authors - Ngày xuất bản:

article.publish_date - Nội dung:

article.text

Ví dụ:

title = article.title

author = article.authors

publish_date = article.publish_date

content = article.text - Tiêu đề:

-

In thông tin đã trích xuất:

Cuối cùng, bạn có thể in thông tin đã trích xuất để phân tích hoặc xử lý thêm:

print("Title:", title)

print("Author:", author)

print("Publish Date:", publish_date)

print("Content:", content)

Với các bước này, bạn đã thành công trong việc scraping và trích xuất thông tin từ một bài báo tin tức bằng newspaper3k. Việc sử dụng cơ bản này cung cấp một nền tảng vững chắc để khám phá các tính năng nâng cao và tùy chỉnh mà gói này cung cấp.

Các tính năng nâng cao

Mặc dù newspaper3k rất xuất sắc trong việc scraping bài báo tin tức cơ bản, nhưng nó cũng cung cấp một loạt các tính năng nâng cao giúp tăng tính linh hoạt và tiện ích của nó. Hãy cùng khám phá một số tính năng này:

-

Phát hiện ngôn ngữ:

Bài báo tin tức có thể được viết bằng nhiều ngôn ngữ khác nhau. newspaper3k có thể tự động phát hiện ngôn ngữ của một bài viết, điều này đặc biệt hữu ích cho việc scraping nội dung đa ngôn ngữ. Để truy cập ngôn ngữ đã phát hiện:

detected_language = article.meta_lang -

Trích xuất từ khóa:

Trích xuất từ khóa từ một bài viết có thể cung cấp những hiểu biết giá trị về nội dung của nó. Với newspaper3k, bạn có thể dễ dàng lấy lại các từ khóa hàng đầu:

article.nlp() # chạy NLP

keywords = article.keywords -

Trích xuất hình ảnh:

Hình ảnh thường đi kèm với các bài báo tin tức. Sử dụng newspaper3k, bạn có thể trích xuất hình ảnh chính liên quan đến bài viết:

main_image_url = article.top_image -

Xử lý ngoại lệ:

Web scraping có thể gặp phải nhiều vấn đề khác nhau, chẳng hạn như lỗi mạng hoặc URL không hợp lệ. newspaper3k cung cấp các cơ chế xử lý ngoại lệ để giúp bạn xử lý các tình huống như vậy một cách uyển chuyển. Kẹp code của bạn trong một khối try-except:

from newspaper import ArticleException

try:

# Code scraping của bạn ở đây

raise ArticleException('test')

except ArticleException as e:

print("Error:", e)

Bằng cách tận dụng các tính năng nâng cao này, bạn có thể tạo ra các ứng dụng scraping toàn diện và sâu sắc hơn. Cho dù bạn đang phân tích xu hướng ngôn ngữ, xác định các chủ đề chính hay thu thập hình ảnh liên quan, newspaper3k đều cung cấp các công cụ bạn cần.

Tùy chỉnh và cấu hình

Tính linh hoạt của Newspaper3k mở rộng đến các tùy chọn tùy chỉnh của nó. Bạn có thể cấu hình nhiều khía cạnh của gói để điều chỉnh nó cho phù hợp với nhu cầu scraping của mình:

-

Tùy chọn cấu hình:

Bạn có thể cấu hình hành vi của newspaper3k bằng cách sửa đổi cài đặt cấu hình của nó. Ví dụ: bạn có thể tắt một số tính năng như phân tích văn bản đầy đủ hoặc ghi nhớ để tiết kiệm tài nguyên:

from newspaper import Config

config = Config()

config.memoize_articles = False

config.fetch_images = False -

Mạo danh User-Agent:

Một số trang web có thể hạn chế hoặc sửa đổi nội dung dựa trên user agent. Bạn có thể đặt user agent tùy chỉnh để tránh bị phát hiện:

config = Config()

config.browser_user_agent = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3" -

Định dạng đầu ra:

Bạn có thể sửa đổi định dạng đầu ra cho ngày tháng bằng tùy chọn cấu hình

publish_date_formatter:config = Config()

config.publish_date_formatter = lambda date: date.strftime("%Y-%m-%d %H:%M:%S")

Bằng cách cấu hình newspaper3k cho phù hợp với yêu cầu của bạn, bạn có thể đảm bảo rằng các hoạt động scraping của bạn vừa hiệu quả vừa hiệu quả.

Khi bạn khám phá các tính năng nâng cao và tùy chọn tùy chỉnh này, bạn sẽ mở khóa toàn bộ tiềm năng của newspaper3k và nâng các dự án scraping tin tức của bạn lên một tầm cao mới. Trong các phần tiếp theo của bài viết này, chúng ta sẽ đi sâu vào các thực tiễn tốt nhất cho việc scraping có đạo đức và scraping quy mô lớn hiệu quả, cung cấp cho bạn một bộ công cụ toàn diện để trích xuất dữ liệu tin tức thành công.

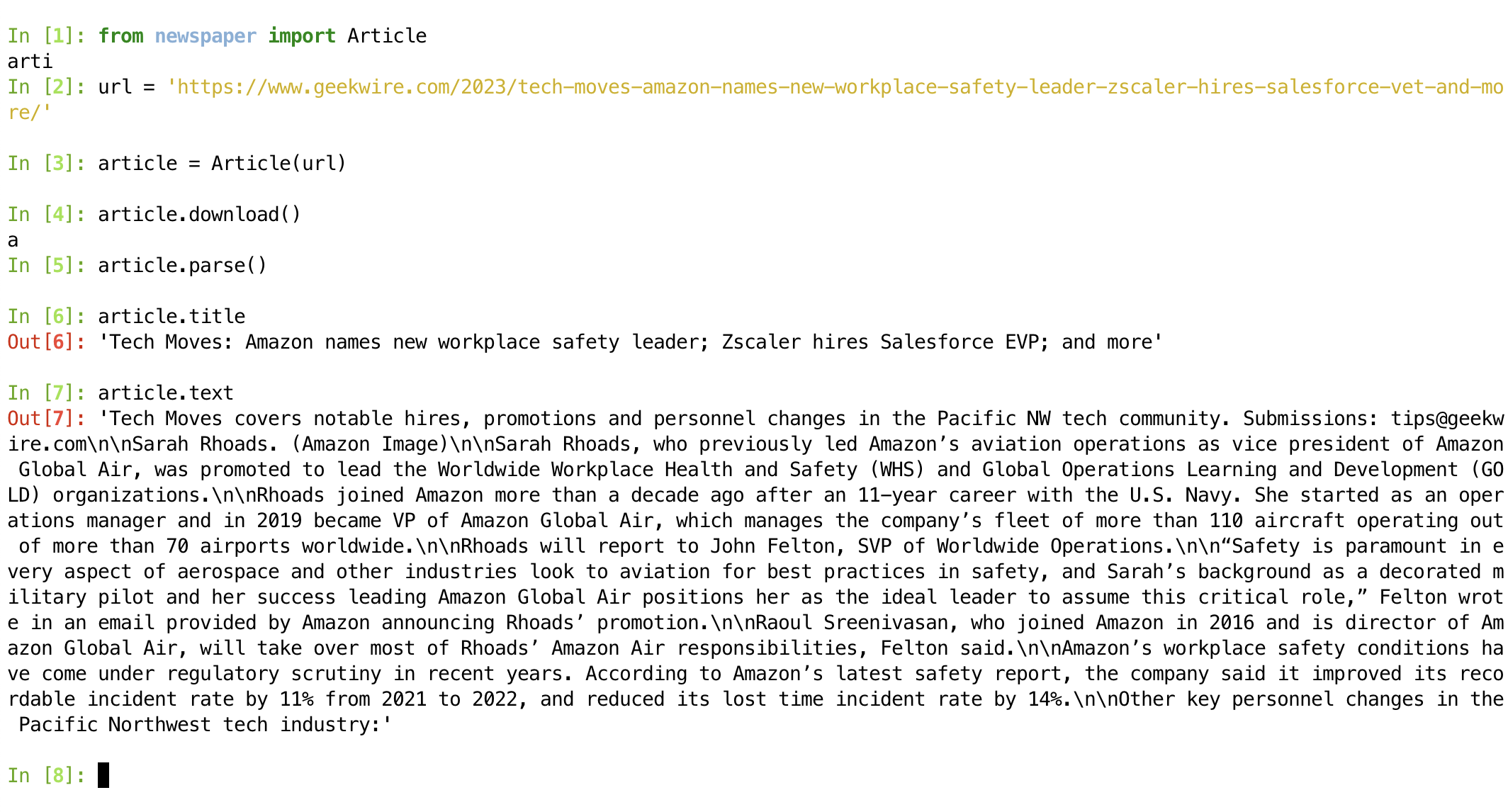

Lỗi có thể xảy ra

Có hai vấn đề chính mà chúng ta có thể gặp phải

- Nội dung được hiển thị bằng JavaScript: Nếu một trang web dựa nhiều vào JavaScript để hiển thị nội dung của nó, newspaper3k có thể gặp khó khăn trong việc trích xuất dữ liệu chính xác, vì nó chủ yếu xử lý HTML tĩnh.

- Văn bản đôi khi không hợp lệ: Có một số trang web/URL có nhiều nội dung văn bản, tuy nhiên, kết quả

Article.textđã phân tích cú pháp là không chính xác. Ví dụ: https://www.geekwire.com/2023/tech-moves-amazon-names-new-workplace-safety-leader-zscaler-hires-salesforce-vet-and-more/

Xử lý scraping quy mô lớn

Khi các dự án web scraping của bạn phát triển, bạn có thể thấy mình phải xử lý một số lượng lớn bài báo từ nhiều nguồn khác nhau. Việc quản lý hiệu quả khối lượng dữ liệu này đòi hỏi sự lập kế hoạch và xem xét cẩn thận. Dưới đây là một số chiến lược để xử lý scraping tin tức quy mô lớn bằng newspaper3k:

-

Điều tiết yêu cầu:

Các trang web có thể có các giới hạn tốc độ hoặc các biện pháp chống scraping. Để tránh làm quá tải máy chủ và có thể bị chặn, hãy triển khai điều tiết yêu cầu bằng cách giới thiệu độ trễ giữa các yêu cầu:

import time delay = 2 # Độ trễ tính bằng giây giữa các yêu cầu

for article_url in article_urls:

article = Article(article_url)

article.download()

article.parse()

# Code xử lý của bạn ở đây...

time.sleep(delay) -

Xử lý hàng loạt:

Nếu bạn có một số lượng lớn bài báo cần scraping, hãy xem xét việc triển khai xử lý hàng loạt. Nhóm các bài báo thành các nhóm nhỏ hơn và xử lý từng nhóm theo trình tự để quản lý tài nguyên hiệu quả.

Đa luồng được hỗ trợ sẵn -

Scraping không đồng bộ:

Lập trình không đồng bộ cho phép bạn gửi nhiều yêu cầu cùng một lúc, cải thiện tốc độ scraping. Các thư viện như

asynciovàaiohttpcó thể được kết hợp với newspaper3k để scraping không đồng bộ. -

Lưu trữ dữ liệu:

Xác định cách bạn sẽ lưu trữ dữ liệu đã scraping. Sử dụng cơ sở dữ liệu hoặc định dạng tệp như CSV hoặc JSON có thể giúp bạn sắp xếp và truy cập thông tin dễ dàng.

-

Xử lý lỗi và ghi nhật ký:

Scraping quy mô lớn có thể gặp phải nhiều lỗi khác nhau. Triển khai các cơ chế xử lý lỗi và ghi nhật ký kỹ lưỡng để theo dõi các vấn đề và khắc phục sự cố hiệu quả.

Bằng cách thực hiện các chiến lược này, bạn có thể tự tin mở rộng các dự án scraping tin tức của mình trong khi vẫn duy trì hiệu quả và tuân thủ các thực tiễn scraping có đạo đức.

Thực tiễn tốt nhất

Khi bạn bắt đầu hành trình scraping tin tức của mình với newspaper3k, điều quan trọng là phải tuân theo các thực tiễn tốt nhất để đảm bảo việc trích xuất dữ liệu có đạo đức và có trách nhiệm:

-

Tôn trọng chính sách trang web:

Luôn xem xét và tuân thủ các điều khoản sử dụng và chính sách scraping của trang web. Tránh scraping các trang web mà rõ ràng là cấm scraping hoặc có các điều khoản chống lại điều đó.

-

Hạn chế tốc độ:

Triển khai hạn chế tốc độ và độ trễ để tránh làm quá tải các trang web bằng các yêu cầu quá mức. Scraping có trách nhiệm duy trì tính toàn vẹn của các trang web mà bạn đang trích xuất dữ liệu.

-

User Agent và Robots.txt:

Đặt một user agent phù hợp để xác định bot scraping của bạn và tôn trọng các tệp "robots.txt" của trang web, tệp này chỉ định phần nào của trang web có thể được thu thập.

-

Bản quyền và ghi công:

Nếu bạn có kế hoạch sử dụng dữ liệu đã scraping để phân phối công khai, hãy đảm bảo bạn ghi công nội dung cho nguồn gốc ban đầu của nó và tôn trọng luật bản quyền.

-

Thỏa thuận sử dụng dữ liệu:

Nếu bạn đang scraping dữ liệu cho mục đích thương mại hoặc thay mặt người khác, hãy xem xét việc soạn thảo một thỏa thuận sử dụng dữ liệu nêu rõ các điều khoản thu thập, lưu trữ và sử dụng dữ liệu.

Bằng cách tuân thủ các thực tiễn tốt nhất này, bạn sẽ góp phần duy trì tính toàn vẹn của các trang web, bảo vệ các dự án scraping của bạn khỏi các vấn đề pháp lý và thúc đẩy mối quan hệ tích cực giữa những người scraping dữ liệu và nhà cung cấp nội dung.

Kết luận

Trong lĩnh vực web scraping, newspaper3k nổi bật như một gói Python linh hoạt và thân thiện với người dùng được thiết kế cho việc trích xuất bài báo tin tức. Thông qua hướng dẫn toàn diện này, chúng ta đã đi từ những điều cơ bản về cài đặt và sử dụng đến các tính năng nâng cao, tùy chọn tùy chỉnh, chiến lược scraping quy mô lớn và các thực tiễn tốt nhất cho việc scraping có trách nhiệm.

Được trang bị kiến thức này, bạn đã sẵn sàng bắt đầu các dự án scraping tin tức của riêng mình, tận dụng sức mạnh của newspaper3k để thu thập thông tin thời gian thực, trích xuất thông tin chi tiết và đóng góp vào lĩnh vực bạn quan tâm. Khi bạn tiếp tục khám phá lĩnh vực trích xuất dữ liệu, hãy nhớ tuân thủ các tiêu chuẩn đạo đức và tôn trọng các trang web mà bạn đang scraping. Thế giới tin tức nằm trong tầm tay bạn – đã đến lúc bắt đầu scraping!